News

Etherscan Introduces Code Reader: The AI-Powered Tool For Ethereum Contract Analysis

The aforementioned tool provides users with the capability to access and comprehend the source code of a particular contract address through the assistance of an artificial intelligence prompt.

Etherscan, the Ethereum block explorer and analytics platform, recently introduced a novel tool called "Code Reader" on June 19. This tool employs artificial intelligence to retrieve and interpret the source code of a specific contract address. Upon receiving a prompt from the user, Code Reader generates a response using OpenAI's large language model, thereby furnishing valuable insights into the contract's source code files. The tutorial page of the tool states as such:

“To use the tool, you need a valid OpenAI API Key and sufficient OpenAI usage limits. This tool does not store your API keys.”

Code Reader's potential applications include acquiring a more profound comprehension of a contract's code through AI-generated explanations, obtaining comprehensive lists of smart contract functions associated with Ethereum data, and comprehending how the underlying contract interacts with decentralized applications. The tutorial page of the tool elaborates that upon retrieving the contract files, users can select a specific source code file to peruse. Furthermore, the source code can be modified directly within the UI before being shared with the AI.

In the midst of an AI boom, certain experts have raised concerns about the current feasibility of AI models. A report recently published by Singaporean venture capital firm Foresight Ventures states that "computing power resources will be the next big battlefield for the coming decade." However, despite the increasing demand for training large AI models using decentralized distributed computing power networks, researchers have identified significant limitations that current prototypes faces, such as complex data synchronization, network optimization, and concerns surrounding data privacy and security.

As an illustration, the aforementioned Foresight researchers highlighted that training a large model with 175 billion parameters utilizing single-precision floating-point representation would necessitate approximately 700 gigabytes. Nevertheless, distributed training mandates the frequent transmission and updating of these parameters between computing nodes. In the event of 100 computing nodes, and each node requiring updates for all parameters at every unit step, the model would require the transmission of 70 terabytes of data per second, which surpasses the capacity of most networks by a significant margin. The researchers concluded that:

“In most scenarios, small AI models are still a more feasible choice, and should not be overlooked too early in the tide of FOMO [fear of missing out] on large models.

Recommended to read

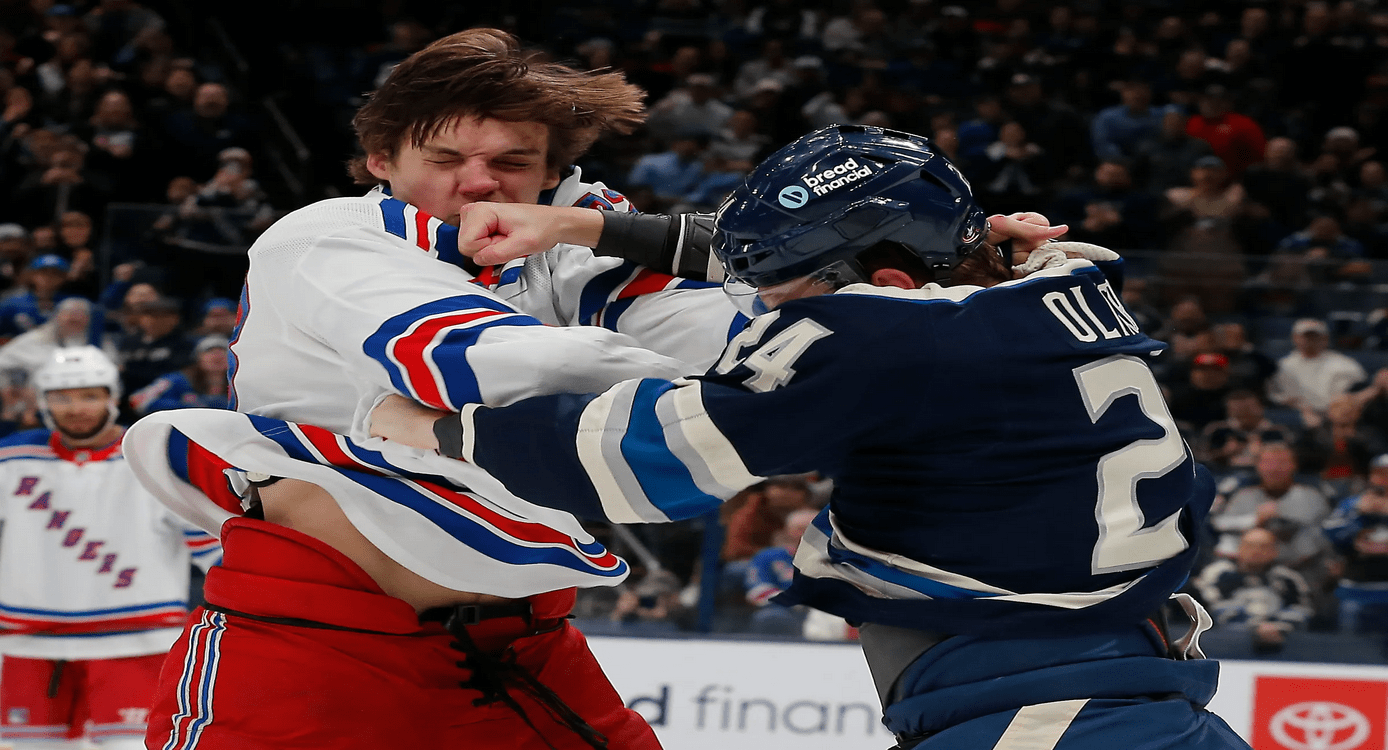

Rempe and Deslauriers Exchange Powerful Punches, Sparking A ContenderFor The 'Fight of the Year.'

Flyers' Nicolas Deslauriers engages in a fiery showdown with Rangers' Matt Rempe, reflecting the evolving dynamics of his season, marked by lineup unc...

Read more

Aoyama Gakuin University Rockets to Victory in Hakone Ekiden, Shattering Records Along the Way

Aoyama Gakuin University secured a historic victory in the 100th Tokyo-Hakone collegiate ekiden road relay, breaking records with a total time of 10 h...

Read more

Bitcoin Surges as Court Paves Way for First US Spot ETF

Crypto prices jumped after a US court ruling cleared obstacles for approving the nation's first spot Bitcoin exchange-traded fund, a long-awaited vict...

Read more

Get K8 Airdrop update!

Join our subscribers list to get latest news and updates about our promos delivered directly to your inbox.